When you open your music streaming app today, some of what you hear may have been created with help from artificial intelligence. AI music generation has quietly moved from science fiction to reality, giving both professionals and hobbyists new ways to create.

Instead of spending hours arranging notes or programming beats, musicians can now type a few words and watch as AI crafts complete compositions in seconds. Whether it’s Google’s MusicLM turning text into music or Meta’s MusicGen building songs from simple prompts, these tools are changing how music is created.

But how exactly do these AI music generators work? What can they really do? And what does this mean for human creativity?

Let’s explore in detail.

What are AI Music Generation Models?

AI music generation models are specialized software systems that create music from scratch using artificial intelligence. Think of them as musical robots that have listened to thousands of hours of songs to learn patterns, structures, and styles.

These models work by taking your input—like a text description (“upbeat jazz with piano”) or even a hummed melody—and turning it into complete musical pieces. Some focus on creating instrumental tracks, while others can generate full songs with vocals and lyrics.

Unlike traditional music production tools that require you to arrange every note manually, AI models handle the technical work for you. They understand musical concepts such as harmony, rhythm, and genre conventions, applying these principles to create compositions that sound like they could have been created by humans.

The technology behind these models ranges from autoregressive systems, which predict notes one after another, to diffusion models, which gradually refine random noise into coherent music. Whatever the approach, the goal remains the same: to make music creation more accessible to everyone, regardless of musical training.
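To make the autoregressive idea concrete, here is a minimal sketch in which each note is chosen based only on the note before it. The transition table is invented for illustration; real systems learn far richer probabilities from audio and usually predict audio tokens rather than note names.

```python
import random

# Hypothetical transition table: for each note, the notes that may follow it.
# Real models learn such probabilities from data; these values are made up.
TRANSITIONS = {
    "C": ["D", "E", "G"],
    "D": ["C", "E", "F"],
    "E": ["D", "F", "G"],
    "F": ["E", "G", "A"],
    "G": ["C", "E", "A"],
    "A": ["F", "G", "C"],
}


def generate_melody(start, length, seed=0):
    """Generate notes one at a time, each chosen given the previous note."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    melody = [start]
    while len(melody) < length:
        previous = melody[-1]
        melody.append(rng.choice(TRANSITIONS[previous]))
    return melody


melody = generate_melody("C", 8)
print(" ".join(melody))
```

Every note depends on what came before it, which is the defining trait of autoregressive generation. A diffusion model would instead start from noise and refine the whole clip over many steps.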

Top AI Music Generation Models in 2025

The music production landscape has changed dramatically, with AI tools now capable of creating everything from simple melodies to complete compositions.

Here are the leading AI music generation models that are reshaping how we create music in 2025:

MusicLM by Google

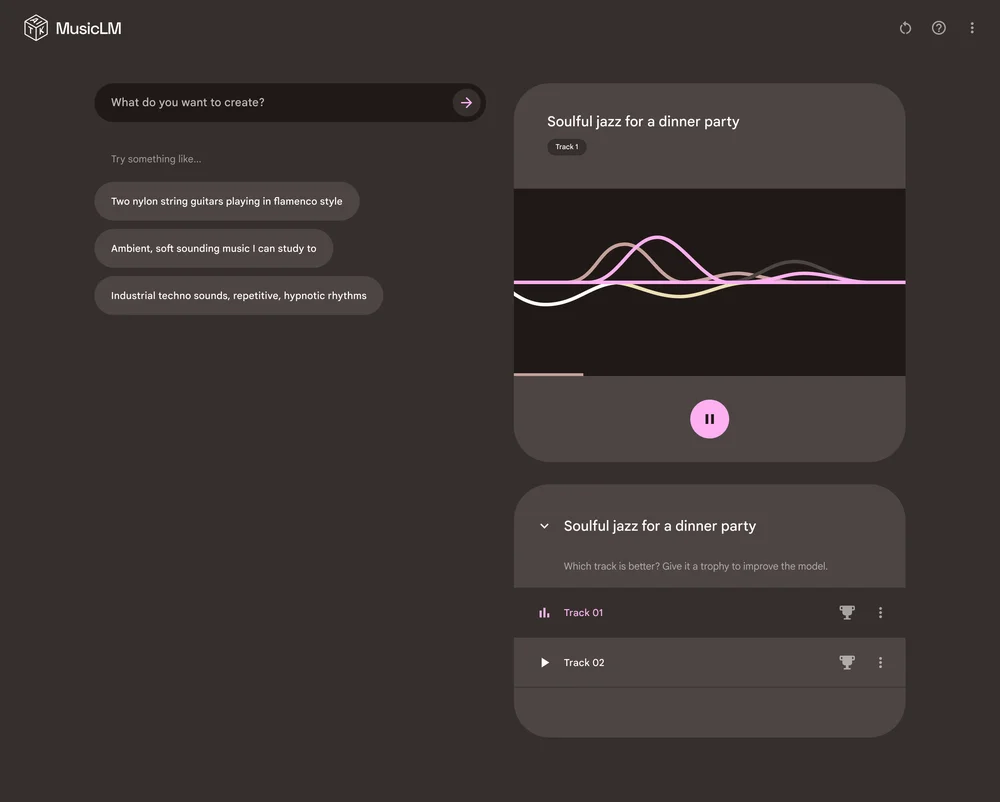

Google’s MusicLM transforms text descriptions into fully realized musical pieces. Using a massive training dataset of 280,000 hours of recorded music, it creates compositions based on your written prompts.

What makes MusicLM stand out is its hierarchical, three-stage approach to music generation. It handles three distinct tasks: matching words to music, shaping the large-scale composition, and filling in small-scale details. This produces coherent musical output that follows your specific instructions about genre, mood, and instrumentation.

The system is available through Google’s AI Test Kitchen app, though with limitations to avoid copyright issues.

MusicGen by Meta

Meta’s MusicGen has become a favorite for its balance of quality and accessibility. Built on a transformer model and trained on 20,000 hours of music, it can generate compositions from either text descriptions or existing melodies.

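The open-source nature of MusicGen has been a game-changer, allowing researchers and developers to modify and improve the system. Rather than working on raw waveforms, it generates discrete audio tokens produced by Meta’s EnCodec codec, which uses Residual Vector Quantization (RVQ) to compress audio into a compact representation while preserving audio quality.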
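The idea behind Residual Vector Quantization can be sketched in a few lines: quantize a value coarsely, then quantize whatever error is left over, and repeat. The example below is a toy that works on a single number with hand-picked codebooks. It is not Meta's implementation, which learns codebooks over high-dimensional vectors.

```python
def nearest(codebook, value):
    """Return the index of the codebook entry closest to value."""
    return min(range(len(codebook)), key=lambda i: abs(codebook[i] - value))


def rvq_encode(value, codebooks):
    """Quantize stage by stage; each stage encodes the leftover residual."""
    indices = []
    residual = value
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        indices.append(idx)
        residual -= codebook[idx]
    return indices


def rvq_decode(indices, codebooks):
    """Reconstruct the value by summing the chosen entry from every stage."""
    return sum(cb[i] for i, cb in zip(indices, codebooks))


# Made-up scalar codebooks, coarse to fine (illustrative assumption).
CODEBOOKS = [
    [-1.0, -0.5, 0.0, 0.5, 1.0],
    [-0.2, -0.1, 0.0, 0.1, 0.2],
    [-0.04, -0.02, 0.0, 0.02, 0.04],
]

sample = 0.637
codes = rvq_encode(sample, CODEBOOKS)
for stages in range(1, len(CODEBOOKS) + 1):
    approx = rvq_decode(codes[:stages], CODEBOOKS[:stages])
    print(stages, round(approx, 3), round(abs(sample - approx), 3))
```

Each extra stage shrinks the reconstruction error, so a handful of small integer codes can stand in for the original value. That is why this family of techniques keeps the representation compact without giving up much quality.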

Many users find MusicGen particularly strong at producing coherent musical structures, even with complex prompts, and report that it sometimes outperforms other tools such as Google’s MusicLM.

Project Music GenAI Control by Adobe

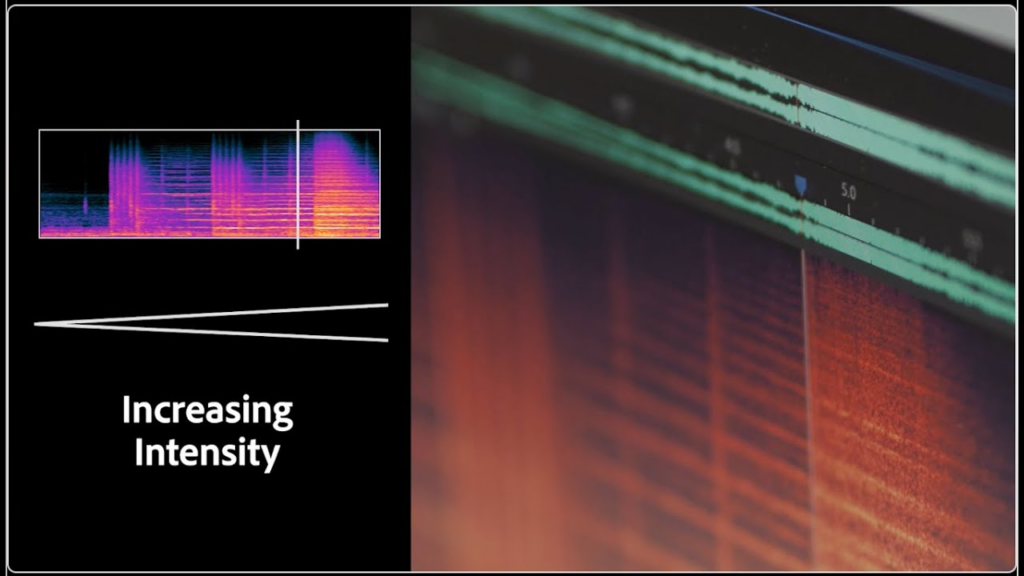

Adobe’s approach with Project Music GenAI Control focuses on giving users detailed editing capabilities over AI-generated music. While other systems primarily generate finished pieces, Adobe’s tool lets you fine-tune almost every aspect.

Users can adjust tempo, modify intensity at specific points, extend clip length, remix sections, and create seamless loops. This granular control makes it particularly valuable for content creators who need music that perfectly fits their visual projects.

Adobe has essentially created the audio equivalent of Photoshop, providing “pixel-level control” for music that transforms AI generation from a one-shot process to an interactive creative workflow.

Stable Audio 2.0 by Stability AI

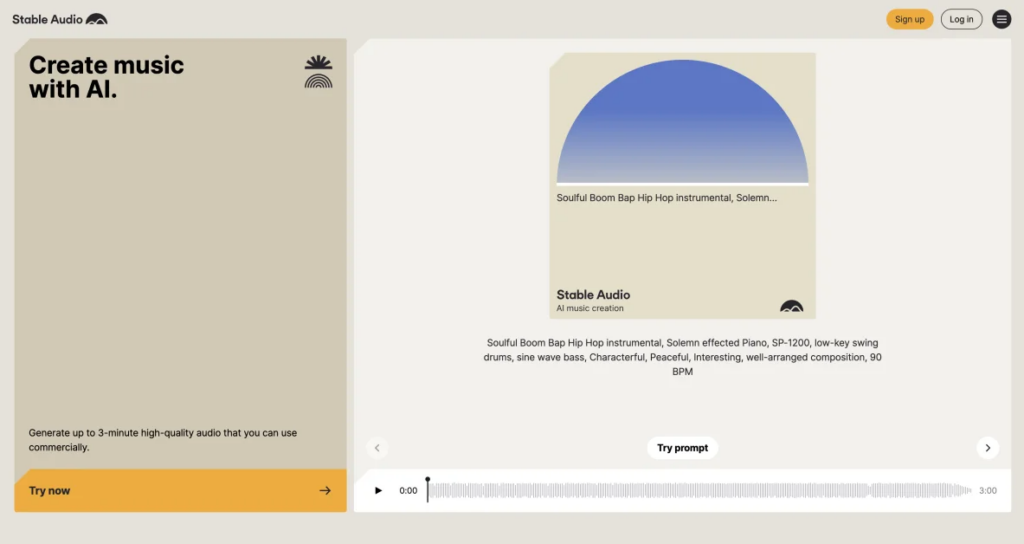

Stability AI’s Stable Audio 2.0 pushes technical boundaries by generating high-quality audio tracks up to three minutes long at 44.1 kHz stereo from a single text prompt.

Its standout feature is audio-to-audio generation, which lets you upload samples and transform them using text instructions. This makes it particularly valuable for remixing and sound design.

Trained on a licensed dataset from AudioSparx, Stable Audio uses a highly compressed autoencoder and diffusion transformer to recognize and reproduce complex musical structures. Its advanced content recognition technology also helps prevent copyright infringement.
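A quick back-of-the-envelope calculation shows why a heavily compressed representation matters at this length and sample rate. The 16-bit sample size below is an assumption used for illustration; the article does not state a bit depth.

```python
SAMPLE_RATE_HZ = 44_100  # 44.1 kHz, as quoted above
CHANNELS = 2             # stereo
DURATION_S = 3 * 60      # three minutes
BYTES_PER_SAMPLE = 2     # assumption: 16-bit PCM

frames = SAMPLE_RATE_HZ * DURATION_S    # sample frames per channel
samples = frames * CHANNELS             # individual sample values
raw_bytes = samples * BYTES_PER_SAMPLE  # uncompressed size

print(f"{samples:,} samples, {raw_bytes / 1_000_000:.1f} MB uncompressed")
```

Under these assumptions a single three-minute track holds nearly 16 million sample values, which is far too long a sequence to model directly. Compressing the audio first gives the generative model a much shorter sequence to work with.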

Can you Generate Music Using ChatGPT or Other LLMs?

While ChatGPT and similar large language models were not built specifically as music tools, many content creators have found creative ways to use them in their music production processes. These text-based AI systems don’t create audio files directly, but they can still be valuable assistants in the music-making process.

Writing chord progressions

ChatGPT works well for generating chord progressions when given explicit instructions. Ask for a progression in a specific key, and you’ll get not just the chords but often the notes within each chord and roman numeral notation.

Simple requests like “Write a chord progression in C major” usually yield accurate results. It can even handle more complex requests, such as jazz chord progressions or secondary dominants.
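Because diatonic chords follow fixed rules, you can verify a progression yourself. The sketch below builds the triads of a major key and labels them with Roman numerals. It spells every note with sharps for simplicity, so it is only reliable for keys such as C, G, or D major. The function names are illustrative.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic
ROMAN = ["I", "ii", "iii", "IV", "V", "vi", "vii°"]


def major_scale(tonic):
    """Return the seven notes of the major scale starting on tonic."""
    root = NOTE_NAMES.index(tonic)
    return [NOTE_NAMES[(root + step) % 12] for step in MAJOR_SCALE_STEPS]


def diatonic_triad(tonic, degree):
    """Stack every other scale note starting from the 1-based degree."""
    scale = major_scale(tonic)
    return [scale[(degree - 1 + offset) % 7] for offset in (0, 2, 4)]


def describe_progression(tonic, degrees):
    """Pair each degree's Roman numeral with the notes of its triad."""
    return [(ROMAN[d - 1], diatonic_triad(tonic, d)) for d in degrees]


for numeral, notes in describe_progression("C", [1, 5, 6, 4]):
    print(f"{numeral:>4}: {'-'.join(notes)}")
```

For the common I-V-vi-IV progression in C major this gives C-E-G, G-B-D, A-C-E, and F-A-C, which you can compare against the notes ChatGPT lists.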

Accuracy drops when you ask for a specific style. Asked for progressions that sound like a particular artist, ChatGPT sometimes misapplies music theory concepts or produces progressions that miss the mark stylistically.

Creating melodies

ChatGPT can describe melodies in text using various notation systems.

For basic melodies, this approach works reasonably well. You can ask for something like “a pentatonic melody in G minor using quarter notes and eighth notes,” and get a usable starting point.
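A request like this is constrained enough to express in code. The sketch below fills two bars of 4/4 with random notes from the G minor pentatonic scale, using only quarter and eighth notes. The bar count, time signature, and random selection are assumptions made for illustration. ChatGPT does not necessarily work this way.

```python
import random

G_MINOR_PENTATONIC = ["G", "Bb", "C", "D", "F"]
DURATIONS = {"quarter": 1.0, "eighth": 0.5}  # length in beats


def pentatonic_melody(bars=2, beats_per_bar=4, seed=1):
    """Fill the requested bars with random scale notes of mixed lengths."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    remaining = bars * beats_per_bar
    melody = []
    while remaining > 0:
        # Only offer note lengths that still fit in the remaining beats.
        options = [name for name, beats in DURATIONS.items() if beats <= remaining]
        name = rng.choice(options)
        melody.append((rng.choice(G_MINOR_PENTATONIC), name))
        remaining -= DURATIONS[name]
    return melody


pentatonic = pentatonic_melody()
for note, duration in pentatonic:
    print(note, duration)
```

The output is the same kind of note-and-duration list ChatGPT returns for such a prompt, and it can be typed into any notation program or DAW as a starting point.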

The challenge comes when trying to align melodies with chord progressions. ChatGPT sometimes struggles to ensure notes work harmonically with underlying chords, occasionally suggesting combinations that clash.
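One simple way to catch such mismatches is to check each melody note against the notes of the chord it is played over. The sketch below uses a small hand-written chord table and an invented phrase. Notes it flags are not automatically wrong, since passing tones are common, but they are worth a second listen.

```python
# Hand-written chord table for this example only.
CHORD_TONES = {
    "Gm": {"G", "Bb", "D"},
    "Cm": {"C", "Eb", "G"},
    "Dm": {"D", "F", "A"},
}


def non_chord_tones(melody_by_chord):
    """Return (chord, note) pairs where the note is not part of the chord."""
    flagged = []
    for chord, notes in melody_by_chord:
        for note in notes:
            if note not in CHORD_TONES[chord]:
                flagged.append((chord, note))
    return flagged


# Invented phrase: each chord is paired with the melody notes played over it.
phrase = [
    ("Gm", ["G", "Bb", "D"]),
    ("Cm", ["C", "F", "G"]),
    ("Dm", ["D", "A", "Bb"]),
]
flagged = non_chord_tones(phrase)
print(flagged)
```

Here the F over C minor and the Bb over D minor are flagged for review, while the first bar passes cleanly.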

Generating lyrics

Lyrics creation is where ChatGPT truly shines. You can request lyrics about specific themes, in particular styles, or even matching existing chord progressions.

Asking ChatGPT to “write lyrics for a folk song about leaving home” typically yields complete verses and choruses that capture the emotional essence of the genre.

You can also format these with chord symbols at appropriate points, creating lead sheets ready for performance.
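The lead-sheet layout itself is easy to reproduce: chord symbols sit on their own line, aligned over the word where each chord change happens. The sketch below shows one way to do that. The lyric and chord placements are made up for illustration, and the function assumes single spaces between words.

```python
def chord_line(lyric, placements):
    """Build a line of chord symbols aligned over the given lyric words.

    placements maps a word index to the chord that starts on that word.
    """
    words = lyric.split()
    line = ""
    position = 0  # column where the current word starts
    for index, word in enumerate(words):
        if index in placements:
            # Pad to the word's column, keeping a gap after any earlier chord.
            column = max(position, len(line) + 1) if line else position
            line = line.ljust(column) + placements[index]
        position += len(word) + 1  # assumes single spaces between words
    return line


lyric = "I packed my bags and left the old town"  # made-up lyric
chords = chord_line(lyric, {0: "G", 4: "C", 7: "D"})
print(chords)
print(lyric)
```

Printed in a monospaced font, the G lands over "I", the C over "and", and the D over "old".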

A Better Alternative: Text-to-Music Tools

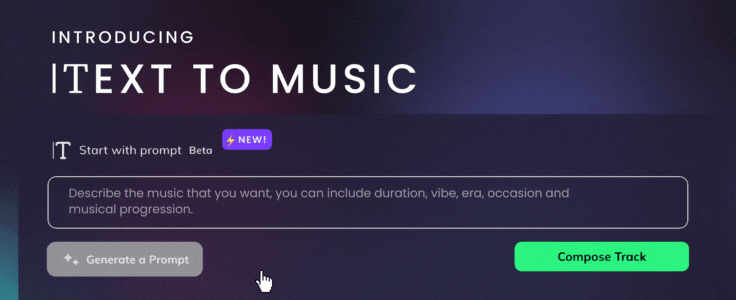

While ChatGPT offers these workarounds, dedicated text-to-music tools provide a much more streamlined experience. Beatoven.ai, for instance, has developed a Text-to-Music feature specifically designed for content creators.

Unlike the technical approaches needed with ChatGPT, Beatoven.ai lets you simply describe the music you want in plain language. For example, you might type “Compose fun music for a 1-minute cooking reel” and receive a complete, royalty-free track tailored to your needs.

The process is simple:

This approach eliminates the technical barriers of working with code or notation systems, making professional-quality music creation accessible to creators regardless of their musical background.

Conclusion

The rise of AI music generation models marks a significant shift in how we think about music creation. What used to require years of training, expensive equipment, and technical expertise is now accessible to anyone with an internet connection and an idea.

Traditional music production presents several challenges. It requires specialized knowledge of music theory, proficiency with complex software, and a significant time investment. These barriers have historically kept many creative people from expressing their musical ideas.

AI music generation tools directly address these limitations. Whether it’s Google’s MusicLM transforming text into full compositions, Meta’s MusicGen building songs from simple prompts, or Adobe’s Project Music GenAI Control offering detailed editing capabilities, these technologies are democratizing music creation in unprecedented ways.

Even language models like ChatGPT can assist with chord progressions, melodies, and lyrics, though they cannot produce actual audio. While these workarounds can spark creativity, they still require technical know-how to translate into usable music.

For content creators who need a straightforward solution, platforms like Beatoven.ai bridge this gap perfectly. Their Text-to-Music feature lets you simply describe what you want (“upbeat music for a travel vlog”) and receive a complete, royalty-free track in minutes. This approach removes technical barriers while still giving you creative control over the final result.

As these technologies continue to evolve, we’re moving toward a future where musical expression isn’t limited by technical skill but is instead driven purely by creative vision. The question is no longer whether AI can help create music, but how we’ll use these powerful new tools to expand the boundaries of human creativity.


FAQs

What is the AI model for music creation?

AI music models use neural networks trained on thousands of songs to generate new compositions. They include autoregressive systems that predict notes sequentially and diffusion models that refine noise into coherent music.

Which AI music generator is best?

The best AI music generator depends on your needs. MusicLM offers high-quality output, MusicGen is open-source, AudioCipher integrates with digital audio workstations (DAWs), while Beatoven.ai provides the simplest experience for content creators.

What is the AI for generating audio?

Audio-generating AI models transform text descriptions or existing audio into new musical compositions using neural networks. They can create everything from simple melodies to complete tracks with multiple instruments.

Sreyashi Chatterjee is a SaaS content marketing consultant. When she is not writing or thinking about writing, she is watching Netflix or reading a thriller novel while sipping coffee.